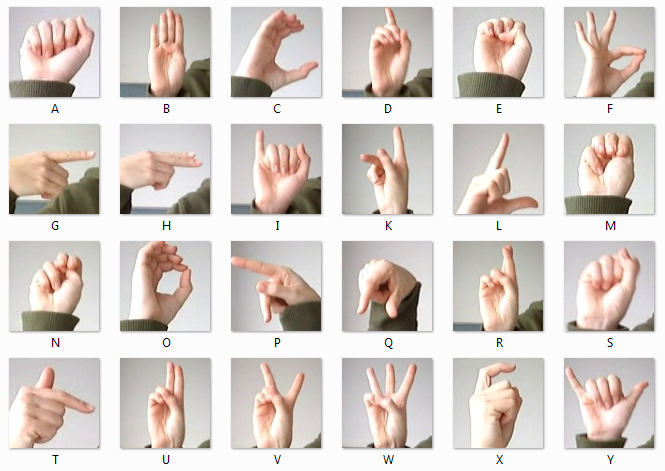

A machine learning model that recognizes hand signs from webcam input and converts them into text and speech in real time.

This project is a real-time sign language detector and translator. It recognizes hand signs (American Sign Language and Indian Sign Language variants) from a webcam or video feed and converts them into readable text and synthesized speech. The system combines hand-keypoint detection with a deep learning classifier and supports both isolated and continuous sign recognition. It is aimed at improving communication between deaf or hard-of-hearing users and people who don't know sign language.

Tech Stack: Python, TensorFlow, MediaPipe, OpenCV, pyttsx3
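The keypoint-then-classifier pipeline described above usually needs a preprocessing step between the two stages. The sketch below is one common approach and not necessarily what this project does: it assumes each hand arrives as 21 `(x, y)` keypoints (the count MediaPipe Hands reports per hand), shifts them so the wrist is the origin, and rescales them so the feature vector no longer depends on where the hand sits in the frame or how close it is to the camera. The function name `normalize_landmarks` is hypothetical.

```python
import math


def normalize_landmarks(landmarks):
    """Turn 21 (x, y) hand keypoints into a 42-value feature vector.

    Assumption: landmark 0 is the wrist, as in MediaPipe Hands.
    The result is invariant to translation and uniform scaling.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")

    wrist_x, wrist_y = landmarks[0]
    # Shift every point so the wrist becomes the origin.
    shifted = [(x - wrist_x, y - wrist_y) for x, y in landmarks]

    # Scale by the largest wrist-to-keypoint distance.
    scale = max(math.hypot(x, y) for x, y in shifted)
    if scale == 0:
        scale = 1.0  # degenerate input: all points coincide

    # Flatten to [x0, y0, x1, y1, ...] for the classifier input.
    return [coord / scale for point in shifted for coord in point]
```

A vector like this can be fed to a small dense network instead of raw pixels, which keeps the classifier lightweight. Whether the project also uses the z coordinate or both hands is not stated here, so the sketch leaves those out.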

Features

- Real-time webcam sign detection (30+ FPS on mid-range CPU/GPU setups).
- Supports isolated signs (single letters/words) and short phrase detection.
- Displays recognized sign as on-screen text and plays synthesized speech.
- Confidence score and probable alternatives shown to user.
- Export recognized sequence as a transcript (.txt).
- Simple web demo (Flask/Streamlit) or desktop demo (Tkinter/Electron wrapper).
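Two of the features above, the confidence score with alternatives and the transcript export, can be illustrated with a minimal standard-library sketch. It assumes the classifier returns one raw score per label for each frame. The function names (`top_k`, `collapse_frames`, `save_transcript`) and the `min_run` threshold are hypothetical and are not taken from the project's code.

```python
import math
from pathlib import Path


def top_k(scores, labels, k=3):
    """Softmax the raw scores and return the k most likely (label, prob) pairs."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def collapse_frames(frame_labels, min_run=5):
    """Emit a label once it has been predicted for min_run consecutive frames.

    None stands for "no hand detected" or "confidence too low" (an assumption).
    """
    transcript = []
    current, run = None, 0
    for label in frame_labels:
        if label == current:
            run += 1
        else:
            current, run = label, 1
        # Append exactly once per run, at the moment it becomes stable.
        if run == min_run and label is not None:
            transcript.append(label)
    return transcript


def save_transcript(tokens, path):
    """Write the recognized tokens to a UTF-8 text file, space-separated."""
    Path(path).write_text(" ".join(tokens) + "\n", encoding="utf-8")
```

The first entry returned by `top_k` would be the displayed sign and the rest the "probable alternatives". Requiring several consecutive identical frames before accepting a sign is a simple way to suppress flicker at 30 FPS. With `min_run=5`, six frames of `"A"`, two empty frames, three frames of `"B"`, and five frames of `"C"` collapse to `["A", "C"]`.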